Autonomy is the Future of the Automotive World

We are in a period of transition. As self-driving cars become more common, drivers must adjust their driving to the capabilities of the vehicle. These capabilities offer significant safety improvements, but they also change which safety burdens remain with the driver, and they require the driver to adapt. One person might adapt readily, while the next might struggle to adapt, or even be hesitant to try.

In this second article in a 3-part series, we discuss some of the human considerations and dynamics, and some of the technology and methods that might be employed to solve these challenges.

I think about a few early demos I've seen of autonomous Class 8 trucks. In those demos, the trucks drive very timidly: they move slowly, and they take corners slowly. I understand why. The developers don't yet have much confidence that they can maneuver the vehicle at high levels of acceleration, laterally or otherwise. And the vehicles themselves probably don't have confidence in their surroundings. They have to keep measuring and confirming what they think is there, making sure they are measuring the right thing and that they are on the right road. They probably also have to give the spotter driver time to react if something goes wrong. However, it is not good enough to have a self-driving vehicle that can only proceed slowly along its path. It must be at least as good as a good driver, and all of that becomes easier if the surrounding infrastructure helps support it.

I was in a meeting with one of the companies that supplies yard spotter tractors to big ports and terminals. They had deployed some self-driving technology, and the feedback was that the autonomous spotters were too slow. To be economically viable, an autonomous spotter must be at least as fast as a good driver, because each spotter is part of a flowing system: if one spotter is slow, it slows down everyone. They had a video of the vehicle maneuvering, and it was doing an okay job. But whenever there was any kind of question, or something crossed its path, it would just stop, wait for the path to clear, and then move on. A port or distribution center is all about throughput. If an autonomous spotter slows down overall throughput, it is not going to add value, even though you don't have to pay someone to drive it.

Put together a team of good yard spotters, and they start to know each other's moves and anticipate what the others are going to do. They don't just look at the vehicle and the way its wheels are turned; they also recognize the driver. They know that's Bob over there, and Bob tends to drive a certain way. There is a rhythm and tempo to the way Bob does his job. Bob meshes with the system, and the system meshes with Bob. The others have also learned Bob's body language. Those are all sensor points: very complex, subtle inputs, and they are very hard to duplicate in a software environment. Yet we will have to write software that does it. That's where AI comes in.

A couple of years ago, I was driving up to Michigan Tech to attend a seminar on safety for autonomous systems. As I was driving, I was thinking about, okay, what do I have to do to drive this trip safely? What would an autonomous system have to do in order to duplicate what I'm doing now?

I was in the slow lane, and I noticed a guy ahead of me going slower. So I pulled over to pass and started passing several cars. I was watching the other drivers' heads: were they looking in their mirrors? I was trying to anticipate whether they would come over into the passing lane, while also checking whether someone was coming up behind me. Sometimes there is a slow truck in the slow lane up ahead, and you don't become aware of it until you move over to pass. I know that some of the people still in the slow lane might want to get out from behind the slow traffic early. Are they ready to make the move? Should I pass? Those are the kinds of decisions involved. They are subtle things that you don't even consciously think about anymore; as an experienced driver, you just do them. You're trying to anticipate what the other drivers are going to do.

It’s going to be very tough to duplicate that in software.

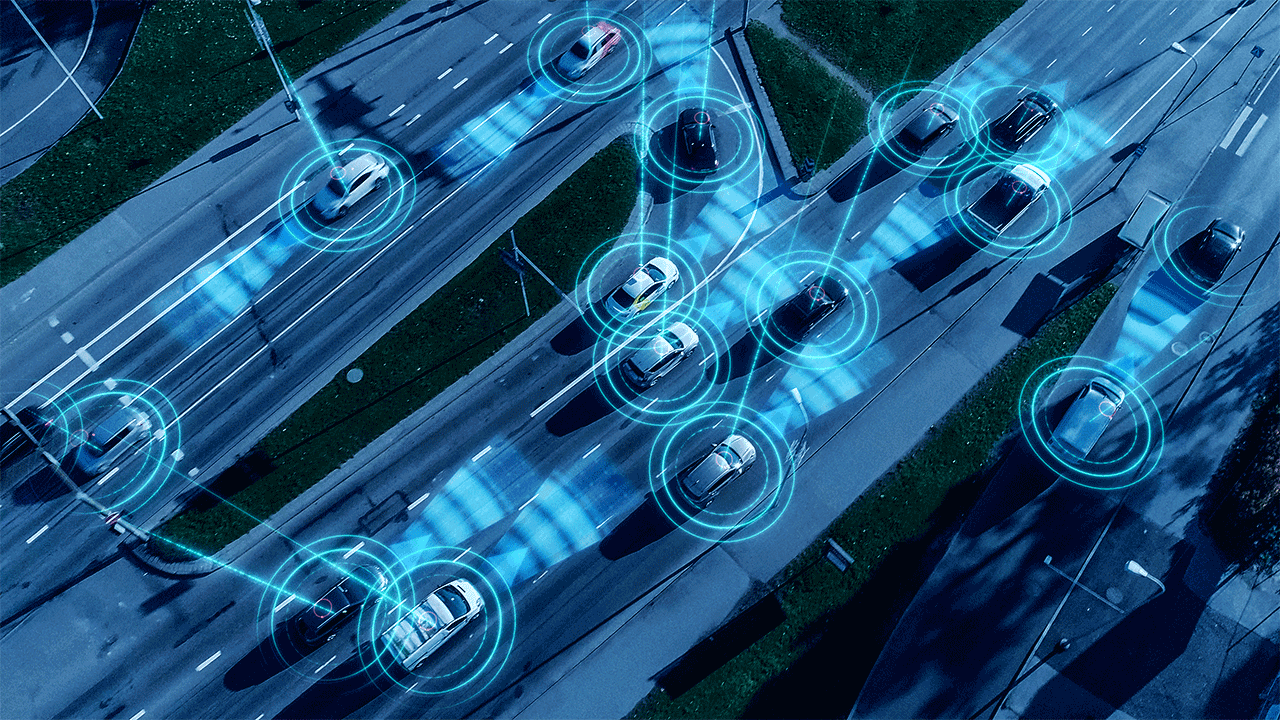

That's where vehicle-to-vehicle (V2V) communication comes in: the other vehicles communicate their intent for the next 15 seconds, or whatever the optimal interval turns out to be. That would help a lot. I think that kind of communication would probably be the answer to the problem, because it would eliminate so much of the guesswork.
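To make the idea concrete, here is a minimal sketch, assuming a hypothetical intent message that lists timed waypoints over a 15-second horizon. The message layout, the `first_conflict` check, and the gap and lane-width thresholds are illustrative assumptions and are not modeled on any particular V2V standard. The point is that a receiving vehicle can see a planned lane change seconds before it happens, instead of inferring it from the other car's motion.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class Waypoint:
    t: float  # seconds after the message was sent
    x: float  # metres along the road, in a shared local frame (assumed)
    y: float  # metres across the road; lane centres at 0.0 and 3.5 here


@dataclass(frozen=True)
class IntentMessage:
    """Hypothetical V2V intent: where the sender plans to be over its horizon."""
    vehicle_id: str
    horizon_s: float
    waypoints: Tuple[Waypoint, ...]  # sorted by t, at least two entries

    def position_at(self, t: float) -> Tuple[float, float]:
        """Linearly interpolate the announced position; clamp outside the waypoints."""
        pts = self.waypoints
        if t <= pts[0].t:
            return pts[0].x, pts[0].y
        for a, b in zip(pts, pts[1:]):
            if a.t <= t <= b.t:
                f = (t - a.t) / (b.t - a.t)
                return a.x + f * (b.x - a.x), a.y + f * (b.y - a.y)
        return pts[-1].x, pts[-1].y


def first_conflict(a: IntentMessage, b: IntentMessage,
                   min_gap_m: float = 25.0, lane_half_width_m: float = 1.75,
                   step_s: float = 0.5) -> Optional[float]:
    """Earliest sampled time at which both vehicles plan to share a lane closer
    than min_gap_m apart, or None if their announced intents never conflict."""
    horizon = min(a.horizon_s, b.horizon_s)
    for i in range(int(horizon / step_s) + 1):
        t = i * step_s
        ax, ay = a.position_at(t)
        bx, by = b.position_at(t)
        same_lane = abs(ay - by) < lane_half_width_m
        if same_lane and abs(ax - bx) < min_gap_m:
            return t
    return None


# Usage: A cruises in the left lane at 30 m/s; B, 40 m ahead in the right lane
# at 25 m/s, announces a move into the left lane between t = 2 s and t = 4 s.
car_a = IntentMessage("A", 15.0, (Waypoint(0, 0, 3.5), Waypoint(15, 450, 3.5)))
car_b = IntentMessage("B", 15.0, (Waypoint(0, 40, 0), Waypoint(2, 90, 0),
                                  Waypoint(4, 140, 3.5), Waypoint(15, 415, 3.5)))
print(first_conflict(car_a, car_b))  # 3.5
```

Car A learns at t = 0 that it will be closing on car B in its own lane about 3.5 seconds later, which is exactly the guesswork the paragraph above says V2V could remove. It only works, of course, if both vehicles broadcast intent.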

The challenge will be the interim phase, when old vehicles and new self-driving cars share the same roads. The new autonomous vehicles, left to themselves, can probably do a really good job. But when they also have to deal with, say, a 20-year-old car, or even worse, a 30-year-old pickup with a loose load bouncing around in the bed, it becomes significantly more challenging. There doesn't have to be anything wrong with the older vehicles, but they won't have any of the features that would let them integrate safely with the autonomous system. It won't be one system; it will be two systems sharing the same space at the same time, each reacting to every movement of the other. Humans will be reacting to autonomous vehicles, and the autonomous vehicles will be talking to each other but to no one else. The autonomous vehicles will know what the other autonomous vehicles plan to do, but they can only guess what the older vehicles might or might not do. Very reactive, very complex. That interim period, as we transition from fully human-operated to fully autonomous vehicles, is going to be the greatest challenge.

It will be imperative for the automotive industry to develop, and reach consensus on, a robust sensor set: the minimum sensing capability an autonomous vehicle needs to do a good job of perception. Take Tesla, which relies primarily on cameras to assess the road. Based on some of the incidents they've had, an argument can be made that cameras alone are not sufficient.

Some of the other car companies have stated publicly that they really need LIDAR for range detection and object detection. Although cameras can do a pretty good job, there are scenarios where they don't perform well, such as bad weather, twilight, or being pointed directly into the rising or setting sun. So we can't rely on them entirely.

When a set of sensors does become standardized, it becomes a common baseline to work from. Then you can concentrate on other parts, such as the controls and the path planning, with more confidence.

Right now, the industry still seems to be assessing what that minimum sensor set is. LIDAR is expensive, but its price will probably come down as production ramps up and economies of scale take effect. So today, the sensor set still needs further development; in particular, capability must increase at a reasonable cost. There are impressive sensors in aerospace and military applications, but they tend to be extremely expensive. It does little good for the technology to exist if no one can afford to use it.

To increase overall sensor system capability, you can either improve the capability of the sensors or use more of them. The final answer might end up being a combination of both. Adding more sensors is only practical if they are cheap. Some LIDAR systems, especially rotating LIDAR systems, are very, very capable, but they are also very expensive. If they could be produced at 1/20th or 1/100th of the cost, so that you could put four or five of them on one vehicle rather than just one, that might be a good solution. Some of the solid-state LIDAR technology currently in development could become a big enabler for advancing the sensors.
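A quick worked example shows why the cost reduction matters so much. Let $C$ be the price of one of today's capable LIDAR units (no actual price is assumed here). Five units produced at 1/20th and at 1/100th of that price would cost

$$5 \times \frac{C}{20} = \frac{C}{4}, \qquad 5 \times \frac{C}{100} = \frac{C}{20}.$$

In other words, five cheaper sensors would cost a quarter, or a twentieth, of what a single current unit costs, while potentially adding coverage and the redundancy needed to cross-check readings.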

Redundancy is also a strategy for robustness. Even if the individual sensors aren't perfect, with as few as three of them a good assessment can be made as to whether the system is working correctly. Using just two of them degrades the trustworthiness of the overall system: if the two disagree because one has failed, you don't know which one has gone out and which one is good, so you don't know which one to trust. If the cost is low enough, however, you can add more sensors and gain confidence in your measurements.
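One common way to exploit a third sensor is median (majority) voting, the idea behind triple modular redundancy. The sketch below is illustrative only. The `vote` function and its `tolerance` parameter are hypothetical, and it is not how any particular vehicle fuses its sensors. It does show the asymmetry described above: two sensors can detect a disagreement but cannot isolate the fault, while three can outvote a single bad reading.

```python
from statistics import median
from typing import List, Optional, Sequence, Tuple


def vote(readings: Sequence[float], tolerance: float) -> Tuple[Optional[float], List[int]]:
    """Cross-check redundant readings of the same quantity (e.g. range in metres).

    Returns (trusted_value, suspect_indices). trusted_value is None when the
    readings cannot be reconciled.
    """
    if len(readings) < 2:
        raise ValueError("need at least two readings to cross-check")
    if len(readings) == 2:
        a, b = readings
        if abs(a - b) <= tolerance:
            return (a + b) / 2, []
        # The pair disagrees, but nothing says which sensor is at fault.
        return None, [0, 1]
    # Three or more: the median is robust to a single bad reading.
    m = median(readings)
    suspects = [i for i, r in enumerate(readings) if abs(r - m) > tolerance]
    agreeing = len(readings) - len(suspects)
    if agreeing <= len(readings) // 2:
        # No strict majority agrees with the median, so nothing is trusted.
        return None, suspects
    return m, suspects


print(vote([42.1, 42.3, 57.0], tolerance=0.5))  # (42.3, [2])
print(vote([42.1, 57.0], tolerance=0.5))        # (None, [0, 1])
```

With three readings the faulty third sensor is both outvoted and identified; with only the first and third sensors, the same fault leaves the system with no trustworthy value at all.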

The number of lines of code continues to grow, but development environments continue to get more efficient. With automatic code generation and model-based development environments, you can define the software at a higher level than the code that eventually becomes the system software. You are not necessarily working strictly in the software domain, although that will still be necessary. As a system or controls developer, you want to work at a high level: how is this function supposed to work?

I believe the modeling tools, along with controls and system development tools, will keep the increased complexity manageable. It's still a challenging environment. Simulation tools such as Simulink have advanced a lot, and they are incorporating features for things like generating scenarios to exercise autonomous control and sensing technologies. But it will still be a challenge. I'm not sure lines of code are a very good metric anymore if you are developing in a different space, such as a model-based development environment. After all, we don't count object instructions anymore.
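To illustrate scenario generation at its simplest, the sketch below sweeps a few parameters of a cut-in maneuver, including the kinds of visibility conditions where cameras struggle, and keeps only the tight cases. The scenario fields, the parameter values, and the 2-second threshold are all hypothetical. This is a plain Python stand-in for the idea, not the Simulink workflow.

```python
from dataclasses import dataclass
from itertools import product
from typing import Iterable, List


@dataclass(frozen=True)
class CutInScenario:
    """Hypothetical test case: another vehicle cuts in ahead of the ego vehicle."""
    ego_speed_mps: float
    cut_in_gap_m: float
    visibility: str

    @property
    def time_gap_s(self) -> float:
        """Time headway at the moment of the cut-in."""
        return self.cut_in_gap_m / self.ego_speed_mps


def generate_scenarios(speeds: Iterable[float], gaps: Iterable[float],
                       visibilities: Iterable[str]) -> List[CutInScenario]:
    """Full combinatorial sweep over the scenario parameters."""
    return [CutInScenario(s, g, v) for s, g, v in product(speeds, gaps, visibilities)]


all_cases = generate_scenarios([20.0, 30.0], [10.0, 30.0, 60.0], ["clear", "fog", "low_sun"])
critical = [c for c in all_cases if c.time_gap_s < 2.0]  # keep the tight cut-ins
print(len(all_cases), len(critical))  # 18 12
```

Even three small parameter lists produce 18 cases, which hints at why tool support for generating and pruning scenarios matters as the parameter space grows.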

One possible hurdle might simply be the energy needed to do all of this. If this technology becomes available to everyone at a reasonable cost, I wonder about the energy required to accomplish it. All of it will further tax what is still a road-based infrastructure. In the long term, is that the right direction from an energy perspective?

There's no way around it: you must have energy to move things. It can be done more efficiently, but some form of energy is a fundamental need that will never go away. So that will be an interesting challenge. The electricity will have to come from somewhere, but how will it be generated? What will be the impacts of generating the power, manufacturing the technology, using it, and disposing of it at the end of its useful life? These questions are worth considering.

Mass transit continues to be part of the conversation, but as a people we like the freedom of controlling our own timing. For big cities, maybe an either/or equation is not the right way to look at it, even with autonomy factored in. There is a lot you can do to make traffic more efficient once existing roadways are populated with coordinated self-driving cars that obey traffic regulations and, for the most part, don't crash. Ultimately, though, I wonder about the overall infrastructure on the ground; maybe it is a bigger transportation question.

A lot of today's car-oriented infrastructure is a result of the structures required to support the original incarnation of the automobile. A car is going to have axles and, typically, four wheels. But when you look at the design requirements for a fully autonomous vehicle, some of the traditional provisions for operating the vehicle might no longer be needed.

The configuration of the cabin can evolve. You might no longer need forward-facing seats, and you can have more flexibility in the cargo space. The traditional provisions for controlling the vehicle, such as the gear shifter, pedals, and steering wheel, all take up space. If the occupants are no longer required to actively drive the vehicle, that space could be used for other amenities. That might be worth thinking about, especially as the industry seeks adoption and acceptance.

A self-driving car must have some of the same basic provisions as a traditional car, such as shelter from the elements, heating and cooling, and a comfortable place to sit. Ideally, it's very quiet. But maybe it's also a place to work. You're traveling anyway, so beyond connectivity, you might want a comfortable place to work and communicate with colleagues. And if you're not driving, you can enjoy yourself more with infotainment, movies, and options for relaxation.

These alternatives may become more important to the buyer once they are no longer required to pay attention to what's outside of the car. Ultimately, a person will have to choose whether they are an operator or a passenger. Striking the balance between being an engaged operator and a passive passenger, and being able to transition smoothly between the two, might turn out to be one of the most significant challenges of all.

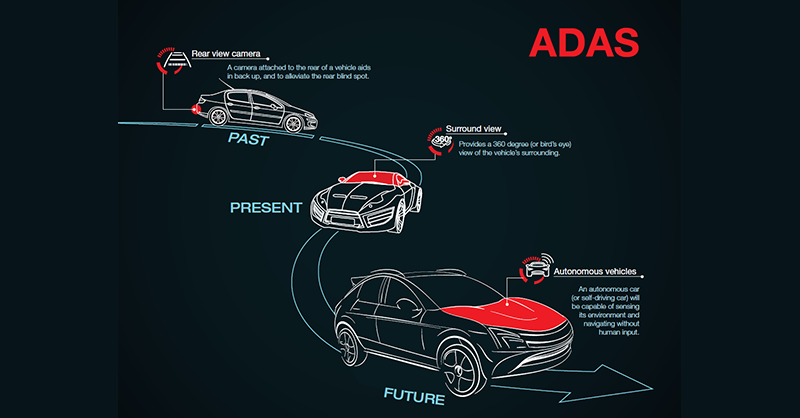
