Automotive Functional Safety and Cybersecurity Platform

LHP will be at the 2018 IoT Solutions World Congress Showcasing Embedded Cybersecurity On a Vehicle Platform


Ashutosh Chandel, Aug 7, 2023 10:30:00 AM

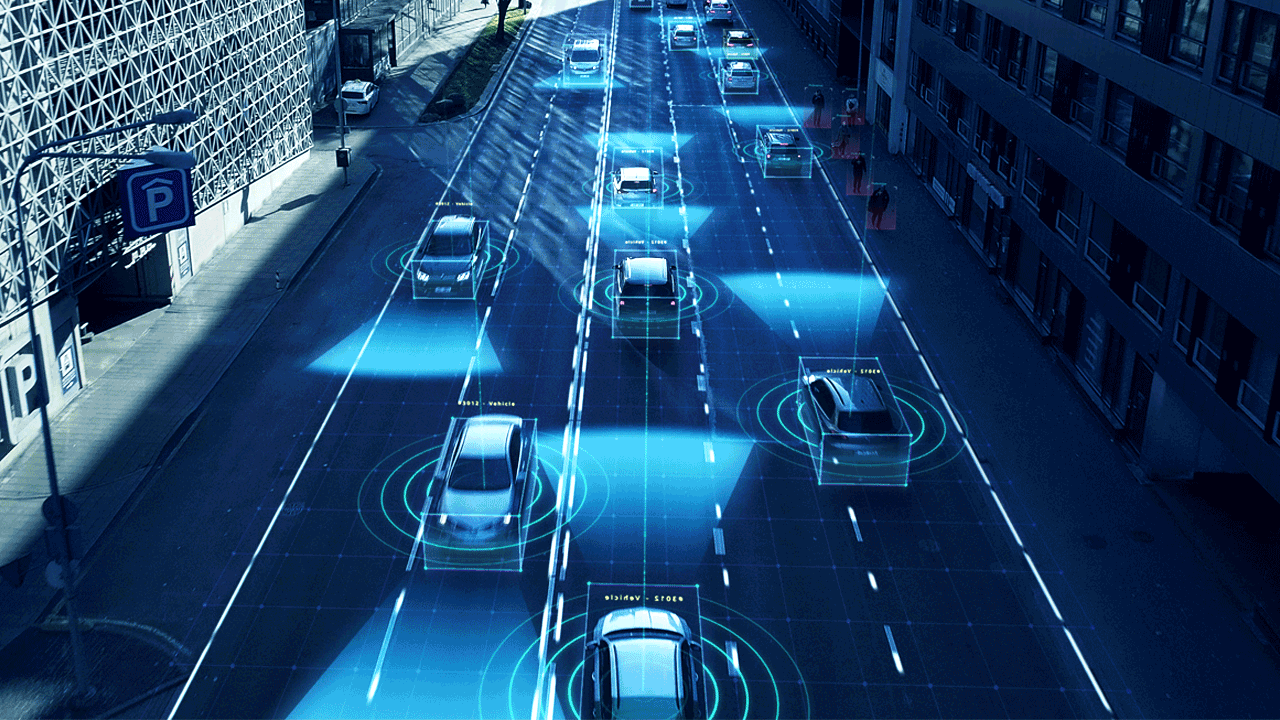

Innovation has been part of automotive manufacturing in every era. Automotive design was itself born of innovation: the idea of taking the horse away from the cart and building a machine capable of going farther and faster than had ever been possible before. Every generation since then has brought some new improvement to the design and manufacture of automobiles, but we’re currently in an era of great upheaval in the automotive world.

Today, consumers can see and appreciate the changes under way more easily than they could the innovations of the late 1990s through the early 2000s. In that era, consumers sometimes found new vehicles disappointingly similar from one year to the next, with perhaps just an added feature or a new switch on the dash. The real innovations operated in the background: they increased reliability and brought fuel economy and emissions performance up to new standards.

The industry is still reaping the rewards of lessons learned in those years, but the vehicles produced then did not always inspire awe. Starting around 2008, however, with the release of Tesla’s first production vehicle, the industry’s focus began to shift. Slowly at first, and more rapidly now, it has turned toward the user experience. Autonomous vehicle (AV) operation and artificial intelligence (AI) both play a large part in this new focus. Now, only 15 years later, we can say that every new car or truck produced is a software-defined vehicle (SDV).

Here are some ways that the entwined futures of AI and AVs will impact software-defined vehicles and the automotive manufacturing industry in the years to come.

Although artificial intelligence is still early in its development, some specifics are worth understanding. The AI required to operate a fully autonomous Level 5 vehicle, for example, is not the same type of AI currently used in a lane-keeping feature or to plan a route in a navigation system. Still, those AI systems have some things in common.

The race to full vehicle autonomy has slowed somewhat because it has run into certain technical limits, such as inaccurate mapping and limitations in vehicle-support infrastructure. Some in the automotive industry have also begun to realign their organizations in response to the success of Tesla’s vertical integration model, perceiving a need to control the technology in their products more closely. And many now expect news of possible regulation of both AV and AI technology.

However, the race to include partially autonomous functions is moving faster than ever. Tesla’s first production car, the Roadster, was a fairly simple battery electric vehicle (BEV) by today’s standards. Introduced in 2008 and built on a Lotus chassis, it boasted a range of 393 km (244 miles) per charge, although some consumers found its advertised 0-60 mph time of about 4 seconds more exciting, which was an innovation in user experience of its own. Tesla didn’t offer the Autopilot feature on certain models until 2014, and at that time it was the only manufacturer with anything like it. As of early 2023, most if not all major automakers offer advanced driver assistance systems (ADAS) on numerous models with sophistication equal to or greater than that of the original Autopilot, or even of the current Enhanced Autopilot[2].

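What is meant by partially autonomous functions? SAE International defines six levels of driving automation, from Level 0 to Level 5. The SAE J3016[3] levels are:

- Level 0, No Driving Automation: the human driver performs the entire driving task; features are limited to warnings and momentary assistance.
- Level 1, Driver Assistance: the system supports either steering or braking/acceleration (for example, adaptive cruise control), while the driver does everything else and supervises.
- Level 2, Partial Driving Automation: the system supports both steering and braking/acceleration, but the driver must constantly supervise.
- Level 3, Conditional Driving Automation: the system drives under limited conditions, and the driver must take over when the system requests it.
- Level 4, High Driving Automation: the system drives under limited conditions and does not require the driver to take over.
- Level 5, Full Driving Automation: the system drives under all conditions.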

It’s easy to see that the competition and innovation occurring around features at the lower levels, like 2, 2+, and 3, are building a scaffolding of proven technology and lessons learned, upon which the Level 4 (and eventually Level 5) autonomous functions will be able to stand much more firmly.
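To make the boundaries between the SAE J3016 levels concrete, the sketch below encodes them as a small Python enum with helpers for who is responsible for driving. The enum and function names are our own illustration, not part of the standard; note also that "Level 2+" is an industry marketing term rather than a J3016 level, so it has no separate entry here.

```python
from enum import IntEnum


class SaeLevel(IntEnum):
    """SAE J3016 levels of driving automation (names shortened from the standard)."""
    NO_AUTOMATION = 0
    DRIVER_ASSISTANCE = 1
    PARTIAL_AUTOMATION = 2
    CONDITIONAL_AUTOMATION = 3
    HIGH_AUTOMATION = 4
    FULL_AUTOMATION = 5


def driver_must_supervise(level: SaeLevel) -> bool:
    # Levels 0-2 are support features: the human is driving and must
    # constantly supervise, even while steering or speed is assisted.
    return level <= SaeLevel.PARTIAL_AUTOMATION


def takeover_on_request(level: SaeLevel) -> bool:
    # At Level 3 the system drives within limited conditions, but the
    # driver must take over when the system requests it.
    return level == SaeLevel.CONDITIONAL_AUTOMATION


def fully_autonomous(level: SaeLevel) -> bool:
    # Level 4 is still limited to specific conditions; only Level 5
    # drives under all conditions.
    return level == SaeLevel.FULL_AUTOMATION


# Adaptive cruise control plus lane centering is a typical Level 2 combination.
adas = SaeLevel.PARTIAL_AUTOMATION
print(driver_must_supervise(adas), fully_autonomous(adas))  # True False
```

Using an `IntEnum` keeps the levels ordered, so "Level 2 or below" is a simple comparison rather than a hand-maintained list.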

Artificial intelligence is going to continue showing up in surprising places within newer model SDVs, and not only those we consider “autonomous.” As demonstrated above, the term “autonomous vehicle” itself has become a bit of a moving target, as so many new vehicles now come with at least Level 1 and often Level 2 (or higher) autonomous vehicle functions, but are certainly not fully autonomous. It is probably fair to say that most SDVs will have at least some autonomous function because, in truth, that’s what ADAS features are.

Additionally, more original equipment manufacturers (OEMs) are looking to use over-the-air (OTA) updates to add features to their vehicles after the sale (Tesla demonstrated the power of this approach by adding Autopilot to vehicles once it became available). These features, along with virtual assistants integrated into the vehicle’s operating system (OS) and customizable features such as image analysis that suggests optimal seat and mirror positions, are examples of AI that enhances the user experience and will become more common in future vehicles. While these features promise to be popular with consumers, they will not allow either the AI or the operator to alter the operation of safety-critical systems, thereby safeguarding the functional safety elements.
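The separation described above, where AI-driven convenience features can change over the air but safety-critical behavior cannot, could be enforced in part by an admission check on each update. The following is a minimal sketch under stated assumptions: the component names and the `OtaUpdate` type are hypothetical, and refusing every update that touches a safety-critical component is our simplifying policy. A real OEM would presumably route such updates through a separate, validated release process instead.

```python
from dataclasses import dataclass

# Hypothetical component names. A real system would take this set from its
# functional safety analysis (e.g., ISO 26262 ASIL-rated items), not hard-code it.
SAFETY_CRITICAL = frozenset({"brake_controller", "steering_controller", "adaptive_cruise"})


@dataclass(frozen=True)
class OtaUpdate:
    name: str
    targets: frozenset  # components whose software the update would change


def may_install_ota(update: OtaUpdate) -> bool:
    """Admit an OTA update only if it touches no safety-critical component."""
    # isdisjoint() is True when the two sets share no elements.
    return update.targets.isdisjoint(SAFETY_CRITICAL)


assistant = OtaUpdate("voice assistant v2", frozenset({"infotainment"}))
seat_ai = OtaUpdate("seat/mirror suggestions", frozenset({"cabin_camera", "seat_module"}))
cruise = OtaUpdate("cruise tuning", frozenset({"adaptive_cruise", "infotainment"}))

for u in (assistant, seat_ai, cruise):
    print(u.name, may_install_ota(u))
```

The virtual assistant and seat/mirror updates are admitted, while the cruise tuning update is refused because one of its targets is on the safety-critical list.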

The inclusion of AI in more safety-critical systems on a software-defined vehicle raises not only functional and technical questions but also ethical and, certainly, legal ones. Some of these may never have a definite answer, but OEMs, suppliers, their legal teams, and consumers will all be affected by them or encounter situations that require some attempt to answer them. In manufacturing, moreover, we know that AI involved with any safety-critical system must be approached very carefully from a functional safety standpoint.

If we consider AI to be defined by its ability to learn, to alter its own code (in practice, its learned model parameters) in response to analyzing input or feedback, then in at least some situations we are all teaching it. But what happens if it learns the “wrong” thing? Who is responsible if an error made by an AI system results in an accident? Narrow AI, at least, is not sentient, cannot feel remorse for its actions, and is, after all, just a program. How would an insurance company assess the risk of insuring a Level 5 autonomous car when no one can predict what the vehicle’s AI will “learn”? Some of these questions currently have no good answer, and that uncertainty is another reason full autonomous driving has lost some of its momentum.

Machine learning, though not the same as human learning, still occurs in even the most narrowly focused AI; it is a defining characteristic of artificial intelligence. Some industries using AI (and some uses of AI in automotive) can constrain the AI, or artificially re-model what it has learned, in order to keep its focus on fixed parameters. AI governance is needed because machine learning makes AI code dynamic. Stephanie Zhang, head of ModelOps at JPMorgan Chase, speaks to this in an MIT interview:

“We all know that AI model development is not like code software development where if you don't change the code, nothing really changes, but AI models are driven by data. So as the data and environment change, it requires us to constantly monitor the model's performance to ensure the model is not drifting out of what we intended. So, the continuous compliance requires that AI models are constantly being monitored and updated to reflect the changes that we observe in the environment to ensure that it still complies to the regulatory requirements. As we know, more and more regulatory rules are coming across the world in the space of using data and using AI[4].”
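The continuous monitoring Zhang describes is often implemented with a drift metric that compares the data a model sees in service against the data it was validated on. The sketch below uses the population stability index (PSI), which is our choice of metric rather than one named in the interview; the 0.2 alert threshold is a common rule of thumb assumed here, and the bin proportions are made-up illustrative values.

```python
import math
from typing import Sequence


def population_stability_index(expected: Sequence[float],
                               actual: Sequence[float],
                               eps: float = 1e-6) -> float:
    """PSI between two binned distributions given as per-bin proportions."""
    if len(expected) != len(actual):
        raise ValueError("both distributions must use the same bins")
    psi = 0.0
    for e, a in zip(expected, actual):
        # Floor empty bins at eps so the logarithm stays finite.
        e, a = max(e, eps), max(a, eps)
        psi += (a - e) * math.log(a / e)
    return psi


def has_drifted(expected: Sequence[float], actual: Sequence[float],
                threshold: float = 0.2) -> bool:
    # 0.2 is a common rule-of-thumb cutoff, assumed here; a real threshold
    # would be set and justified as part of the model's governance process.
    return population_stability_index(expected, actual) > threshold


# Illustrative share of one model input (say, following distance) in four bins.
baseline = [0.25, 0.25, 0.25, 0.25]  # distribution when the model was validated
in_field = [0.10, 0.20, 0.30, 0.40]  # distribution observed later
print(round(population_stability_index(baseline, in_field), 3))  # 0.228
print(has_drifted(baseline, in_field))  # True
```

PSI only flags that the input distribution has shifted; deciding whether the model must then be retrained, constrained, or re-validated remains a governance decision.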

JPMorgan Chase’s customers’ needs are important, but its products are not classed as safety-critical or subject to ISO 26262, as a software-defined vehicle with autonomous features would be. Therefore, should AI become fully active in any automotive setting where it controls safety-critical functions, its governance, and especially its constraint to narrow task parameters, should be a primary concern.
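One way to constrain AI to narrow task parameters is to let a simple, deterministic monitor have the final say over whatever the model proposes. That way the output stays within limits that can be validated statically, even if the model itself changes. The sketch below applies this idea to an AI-proposed acceleration command for a cruise control function. The `Envelope` limits and function names are hypothetical, chosen only for illustration, and the pattern is our example rather than a method prescribed by ISO 26262.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Envelope:
    # Hypothetical limits, in m/s^2; real values would come from the
    # vehicle's validated safety requirements.
    min_accel: float = -3.0  # strongest deceleration the AI may request
    max_accel: float = 2.0   # strongest acceleration the AI may request
    max_step: float = 0.5    # largest change allowed per control cycle


def constrain(requested: float, previous: float,
              env: Envelope = Envelope()) -> float:
    """Clamp an AI-proposed acceleration command to fixed, validated bounds."""
    # Limit how quickly the command may change from one cycle to the next...
    limited = min(max(requested, previous - env.max_step), previous + env.max_step)
    # ...then enforce the absolute envelope, whatever the model has learned.
    return min(max(limited, env.min_accel), env.max_accel)


print(constrain(5.0, previous=1.8))    # 2.0  (capped by max_accel)
print(constrain(-10.0, previous=0.0))  # -0.5 (rate-limited)
print(constrain(1.0, previous=1.0))    # 1.0  (already within bounds)
```

Because `constrain` is a few lines of static code with fixed limits, it can be tested exhaustively once, independently of how often the model behind it updates.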

The idea of dynamic code presents multiple issues for automotive software developers as the technology continues to move forward, and the industry at large is still working out how to deal with this reality. There is currently no good way to validate code that is not static and might change daily or even more frequently. Consider, in terms of functional safety, a person who purchases a vehicle. At some point, the AI in the vehicle’s smart cruise control system updates its code. Because code affecting a safety-critical system has changed, that cruise control system, and its operation in conjunction with the brake controller, should be tested before that particular vehicle can be driven. But again, whose responsibility is it to test the system every time it updates? The car’s owner probably cannot. The OEM, the supplier, and possibly the dealer may have the tools, but certainly not the capacity to do this for millions of vehicles updating individually and daily. This is another delicate issue at the intersection of technology and ethics, and it will take time and multiple test cases to fully solve.
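Even without an answer to who should do the testing, a vehicle can at least detect that a safety-relevant model no longer matches the version that was validated. The minimal sketch below compares a SHA-256 digest of the model's serialized parameters against the digest recorded at validation time. The `ValidatedRelease` type and the byte strings standing in for model weights are hypothetical, and what to do once a mismatch is found (revert, disable the feature, or request re-testing) is a design decision this sketch does not make.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class ValidatedRelease:
    # SHA-256 digest of the exact parameters that passed validation testing.
    sha256: str


def fingerprint(model_bytes: bytes) -> str:
    return hashlib.sha256(model_bytes).hexdigest()


def requires_revalidation(model_bytes: bytes, release: ValidatedRelease) -> bool:
    """True if the deployed parameters differ from the validated ones."""
    return fingerprint(model_bytes) != release.sha256


# Hypothetical stand-ins for serialized cruise-control model parameters.
validated_weights = b"\x00\x01\x02\x03"
release = ValidatedRelease(fingerprint(validated_weights))

learned_weights = validated_weights + b"\x04"  # the model has updated itself
print(requires_revalidation(validated_weights, release))  # False
print(requires_revalidation(learned_weights, release))    # True
```

A digest check only tells us that something changed, not whether the change is safe, which is exactly why the validation question raised above remains open.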

Autonomous vehicles and artificial intelligence are the current darlings of the media, an automotive innovation power couple whose combined promise of greater safety and efficiency, transportation access, and personalized user experience is intoxicating. Like many human celebrities, they present their share of ethical and legal challenges. The automotive manufacturing industry as a whole faces a period of massive reorganization in response to the disruption these two technologies, in particular, bring with them. Once these challenges are met, these two technologies will drastically change the landscape of automotive manufacturing, and, ultimately, worldwide cultural ideas of mobility.

LHP will be at the 2018 IoT Solutions World Congress Showcasing Embedded Cybersecurity On a Vehicle Platform

Powered by: LHP Engineering Solutions, AASA Incorporated, National Instruments, and PTC LHP Engineering Solutions, an engineering services provider...

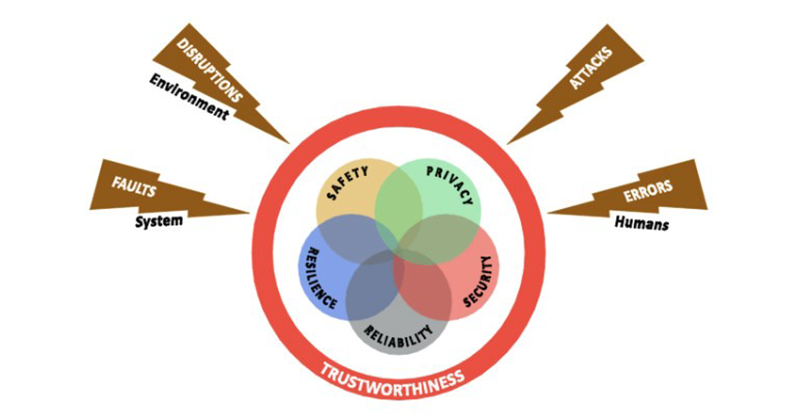

When contending with the complicated and interconnected devices of the Industrial Internet of Things (IIoT), the question of trustworthiness is...